URNights

Night Assistant

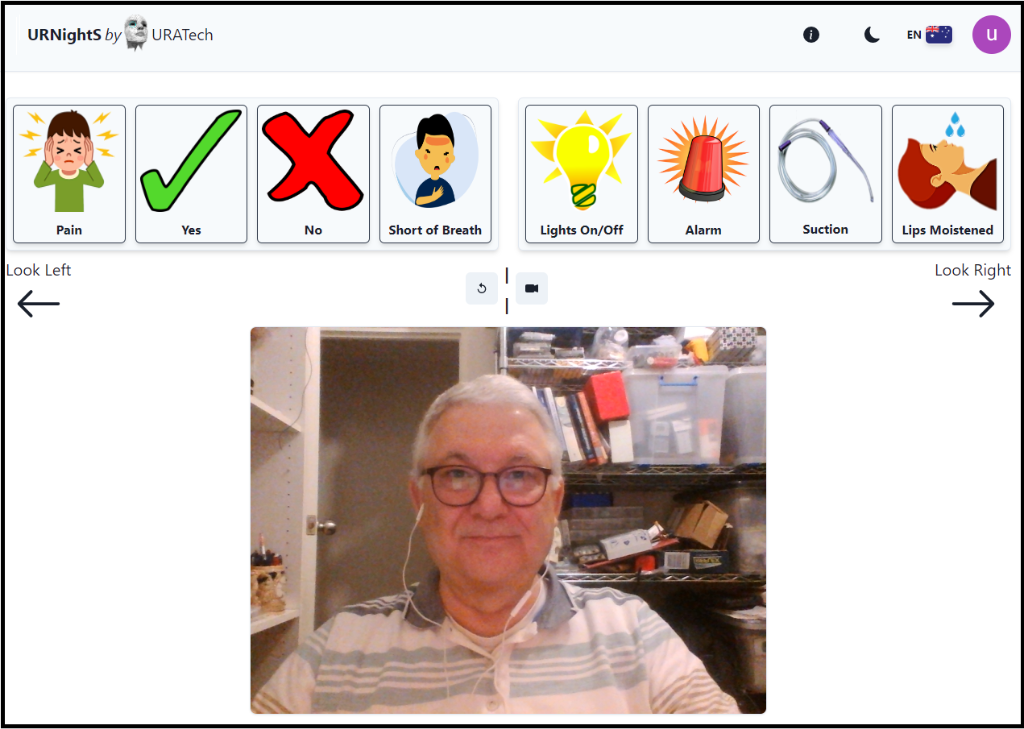

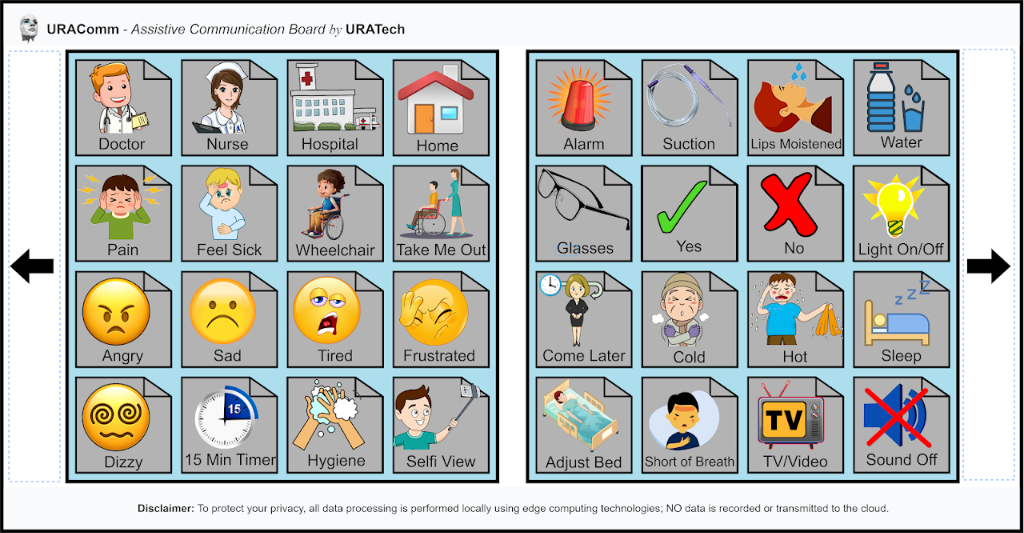

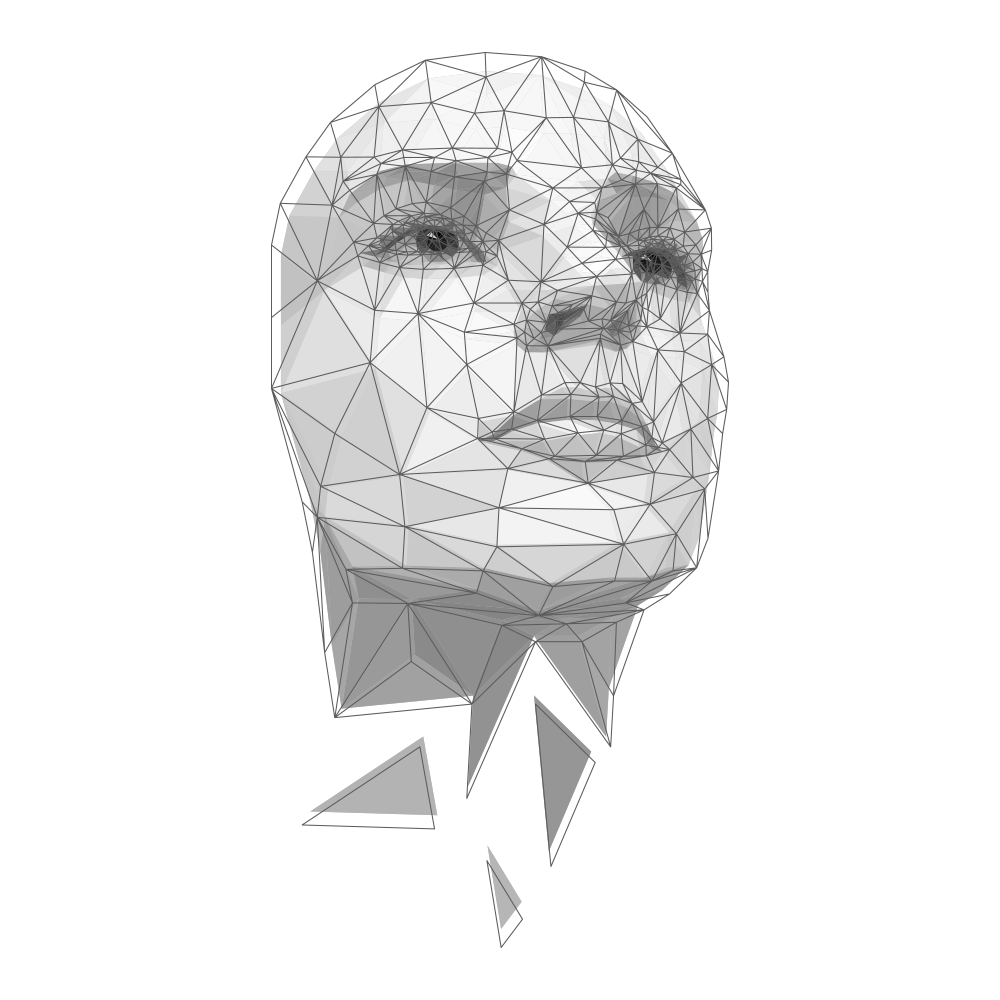

URNights enables you to call for assistance in seconds. It can be controlled by gaze, blinking, head motion, or facial gestures. The smart night assistance system uses a specialised camera to continuously monitor and analyse your facial muscles and eye movements, and it works even in the dark.

● The Challenge

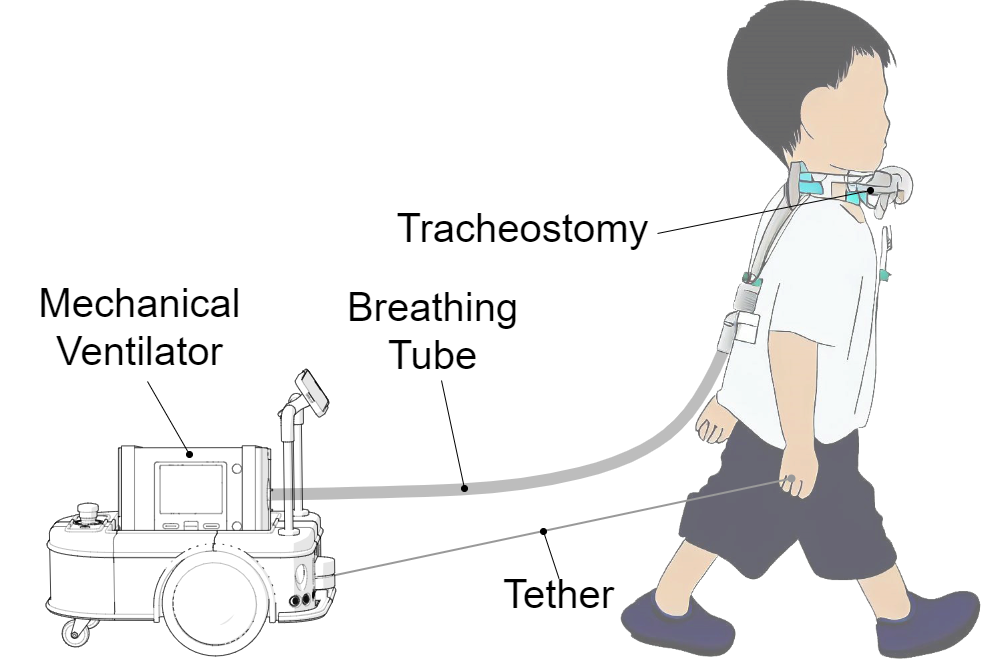

Patients in the advanced stages of Amyotrophic Lateral Sclerosis (ALS), Spinal Muscular Atrophy (SMA), and other genetic or Motor Neuron Diseases (MND) are unable to speak or move their limbs. Most of these patients depend on a permanent mechanical ventilator and breathe through a tracheostomy tube in their throat. Some patients with spinal cord injuries face similar problems. Imagine for a moment that you are a conscious person with Severe Speech and Motor Impairment (SSMI), unable to speak or move. How would you ask for help with any of your needs in the middle of the night? Unfortunately, patients in this situation have no way to call for help at night. They must wait until their caregivers wake up in the morning.

● Our Solution

URNights, the night assistant by URATech, was developed to address this problem. Patients with severe speech and motor impairment can now call for assistance in seconds, so they never have to wait helplessly through the night again.